Classification using Weka – Part 4


Required dependencies

To start using Weka, add the following Maven dependency to your project:

```xml
<dependency>
    <groupId>nz.ac.waikato.cms.weka</groupId>
    <artifactId>weka-stable</artifactId>
    <version>3.6.14</version>
</dependency>
```

Classifier class

In the previous part, we created a class that builds an .arff configuration. Now it’s time to create a classifier that processes that configuration.

```java
import java.io.IOException;
import java.io.StringReader;
import java.util.List;

import org.jetbrains.annotations.NotNull;

import weka.classifiers.Classifier;
import weka.classifiers.Evaluation;
import weka.core.Instance;
import weka.core.Instances;

// Configuration (previous part), FeatureSample and ClassificationResult are project classes.
public class WekaClassifier {

    private final Classifier classifier;
    private final Instances instances;
    private final Configuration configuration;

    public WekaClassifier(@NotNull Classifier classifier, @NotNull Configuration configuration) throws Exception {
        this.classifier = classifier;
        this.configuration = configuration;
        // Parse the .arff text produced by the configuration and train the classifier on it
        this.instances = createInstances(configuration.toString());
        classifier.buildClassifier(instances);
    }

    public Configuration getConfiguration() {
        return configuration;
    }

    /**
     * Evaluates classifier accuracy on the training instances.
     *
     * @return String with classifier evaluation accuracy details
     * @throws Exception if the evaluation fails
     */
    public String evaluate() throws Exception {
        Evaluation eval = new Evaluation(instances);
        eval.evaluateModel(classifier, instances);
        return eval.toSummaryString();
    }

    /**
     * Creates Weka instances from the passed configuration.
     *
     * @param input configuration in .arff format
     * @throws IOException if the input cannot be parsed
     */
    private Instances createInstances(@NotNull String input) throws IOException {
        Instances instances = new Instances(new StringReader(input));
        // The class attribute is the last attribute declared in the configuration
        instances.setClassIndex(instances.numAttributes() - 1);
        return instances;
    }

    /**
     * Returns a classification result for the passed instance.
     * The result contains the predicted class and the probability the classifier assigns to it.
     *
     * @param sample feature values to classify; null values are treated as missing
     * @return classification result for the passed instance
     * @throws Exception if classification fails
     */
    public ClassificationResult getClassForInstance(@NotNull FeatureSample sample) throws Exception {
        List<Double> values = sample.getFeatures();
        // One slot per feature plus one for the (unknown) class attribute
        int instanceElementsNum = values.size() + 1;
        Instance instance = new Instance(instanceElementsNum);
        for (int pos = 0; pos < instanceElementsNum - 1; pos++) {
            if (values.get(pos) == null) {
                instance.setValue(pos, Instance.missingValue());
            } else {
                instance.setValue(pos, values.get(pos));
            }
        }
        // Attach the dataset so the instance knows its attribute definitions and class index
        instance.setDataset(instances);

        double classValue = classifier.classifyInstance(instance);
        double[] distr = classifier.distributionForInstance(instance);
        double probability = distr[(int) classValue];
        return new ClassificationResult(classValue, probability);
    }
}
```

So, we’ve got a wrapper around a Weka classifier that lets us classify an instance and evaluate the classifier. How to use it is shown later in a simple application.
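To make the mechanics of getClassForInstance concrete without a Weka dependency, here is a plain-Java sketch of its two steps. First, the feature list becomes a value array with one extra class slot, where null features and the unknown class are stored as NaN (in Weka 3.6, Instance.missingValue() returns NaN). Second, the most probable class is picked from a probability distribution. As far as I know, Weka’s default classifyInstance does the same argmax for a nominal class (it returns a missing value when all probabilities are zero, which this sketch does not model), which is why distr[(int) classValue] yields the winning probability. InstanceSketch and ResultSketch are hypothetical names, not project classes.

```java
import java.util.Arrays;
import java.util.List;

// Hypothetical stand-in for the project's ClassificationResult.
final class ResultSketch {
    final double classValue;
    final double probability;

    ResultSketch(double classValue, double probability) {
        this.classValue = classValue;
        this.probability = probability;
    }
}

// Plain-Java sketch of the two steps inside getClassForInstance (no Weka dependency).
final class InstanceSketch {

    // One slot per feature plus a trailing class slot; null features and the
    // unknown class become NaN, the value Weka uses to mark "missing".
    static double[] toInstanceValues(List<Double> features) {
        double[] values = new double[features.size() + 1];
        for (int pos = 0; pos < features.size(); pos++) {
            Double v = features.get(pos);
            values[pos] = (v == null) ? Double.NaN : v;
        }
        values[features.size()] = Double.NaN; // the class is what we want to predict
        return values;
    }

    // Index of the highest probability, returned together with that probability.
    // Ties go to the lower index; an all-zero distribution yields class 0.
    static ResultSketch fromDistribution(double[] distr) {
        int best = 0;
        for (int i = 1; i < distr.length; i++) {
            if (distr[i] > distr[best]) {
                best = i;
            }
        }
        return new ResultSketch(best, distr[best]);
    }

    public static void main(String[] args) {
        double[] values = toInstanceValues(Arrays.asList(6.6, null, 4.6, 1.3));
        System.out.println(Arrays.toString(values));
        ResultSketch result = fromDistribution(new double[] {0.02, 0.95, 0.03});
        System.out.println(result.classValue + " " + result.probability);
    }
}
```

Running main prints [6.6, NaN, 4.6, 1.3, NaN] followed by 1.0 0.95.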

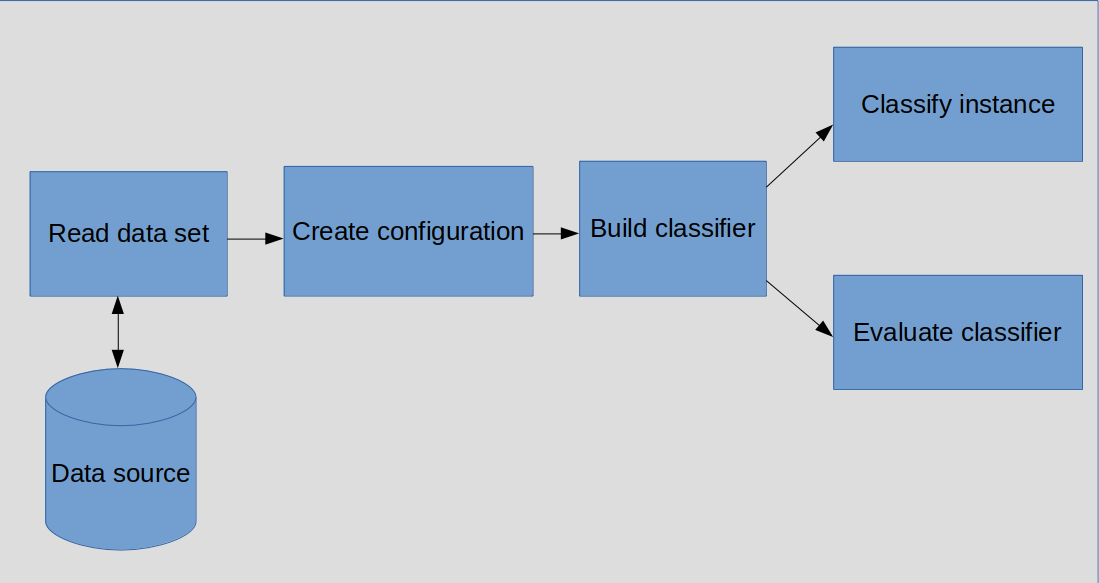

Let’s have a look at the sequence of required actions to perform classification.

Classification application

Let’s create a simple classification application that performs the steps mentioned above. I’m not going to list every class here, or this article would be endless; only the most important logic is shown. You can find the rest of the functionality in the attached archive with the complete project.
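IrisDatasetReader is one of the classes left out. Assuming the resource uses the standard UCI iris.data layout (four comma-separated measurements followed by a class name such as Iris-versicolor), the core of such a reader could look like the hypothetical sketch below. The class-name order, and therefore the class indices 0, 1 and 2, is an assumption that would have to match the project’s IrisType; the real reader returns FeatureSample objects instead of the Row type used here.

```java
import java.io.BufferedReader;
import java.io.IOException;
import java.io.StringReader;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

// Hypothetical sketch of an iris.data reader; not the project's IrisDatasetReader.
final class IrisLineParserSketch {

    // Class names as they appear in the UCI file; the list index is the class value (assumed order).
    static final List<String> CLASS_NAMES =
            Arrays.asList("Iris-setosa", "Iris-versicolor", "Iris-virginica");

    // One parsed row: four measurements plus the class index.
    static final class Row {
        final List<Double> features;
        final int classIndex;

        Row(List<Double> features, int classIndex) {
            this.features = features;
            this.classIndex = classIndex;
        }
    }

    static List<Row> parse(BufferedReader reader) throws IOException {
        List<Row> rows = new ArrayList<>();
        String line;
        while ((line = reader.readLine()) != null) {
            line = line.trim();
            if (line.isEmpty()) {
                continue; // skip blank lines, e.g. at the end of the file
            }
            String[] parts = line.split(",");
            if (parts.length != 5) {
                throw new IOException("Expected 5 columns but got " + parts.length + ": " + line);
            }
            List<Double> features = new ArrayList<>();
            for (int i = 0; i < 4; i++) {
                features.add(Double.parseDouble(parts[i]));
            }
            int classIndex = CLASS_NAMES.indexOf(parts[4]);
            if (classIndex < 0) {
                throw new IOException("Unknown class: " + parts[4]);
            }
            rows.add(new Row(features, classIndex));
        }
        return rows;
    }

    public static void main(String[] args) throws IOException {
        String data = "5.1,3.5,1.4,0.2,Iris-setosa\n6.6,2.9,4.6,1.3,Iris-versicolor\n\n";
        for (Row row : parse(new BufferedReader(new StringReader(data)))) {
            System.out.println(row.features + " -> " + row.classIndex);
        }
    }
}
```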

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

import com.google.common.base.Optional; // Guava Optional (provides absent())
import org.jetbrains.annotations.NotNull;

public class ClassificationApp {

    public static void main(String[] args) throws Exception {
        run();
    }

    private static void run() throws Exception {
        WekaClassifier classifier = makeClassifier();
        System.out.println(classifier.evaluate());

        // A sample with an unknown class: sepal length, sepal width, petal length, petal width
        FeatureSample testSample = new FeatureSample(
                Arrays.asList(6.6, 2.9, 4.6, 1.3), Optional.<Integer>absent()
        );
        ClassificationResult result = classifier.getClassForInstance(testSample);
        System.out.println("Instance has been classified as " + toIrisType(result.getClassValue())
                + " with probability " + result.getProbability() * 100 + "%.");
    }

    @NotNull
    private static WekaClassifier makeClassifier() {
        try {
            return new WekaClassifier(ClassifierFactory.randomForest(), makeConfiguration().build());
        } catch (Exception e) {
            throw new RuntimeException(e);
        }
    }

    private static Configuration.Builder makeConfiguration() {
        // Training samples bundled as a classpath resource
        List<FeatureSample> samples = IrisDatasetReader.collect(WekaClassifier.class.getResourceAsStream("/iris.data.txt"));

        // One class value per IrisType constant: 0, 1, 2, ...
        List<Integer> classValues = new ArrayList<>();
        for (int i = 0; i < IrisType.values().length; i++) {
            classValues.add(i);
        }

        // Attributes must be declared in the same order as the feature values
        Configuration.Builder confBuilder = new Configuration.Builder()
                .name("iris-classification")
                .attribute("sepalLength")
                .attribute("sepalWidth")
                .attribute("petalLength")
                .attribute("petalWidth")
                .classAttribute("type", classValues);

        // Every training sample has a known class value
        for (FeatureSample sample : samples) {
            confBuilder.instance(sample.getFeatures(), sample.getClassValue().get());
        }
        return confBuilder;
    }

    private static IrisType toIrisType(double classValue) {
        return IrisType.forValue((int) classValue);
    }
}
```

As you can see, all we need to do is set a configuration name, the attributes in the correct order and all available classes. After that we add all available data instances, which are used to train the classifier, and build it. At this point we can perform classification by passing instances to the built classifier.
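The Configuration.Builder comes from the previous part and isn’t repeated here. To show the kind of .arff text it is expected to produce, here is a hypothetical minimal builder (ArffSketch is an illustrative name, and the article’s builder may format things differently). It declares the numeric attributes first and the nominal class attribute last, which matches WekaClassifier using numAttributes() - 1 as the class index, and writes "?" for a missing feature value, as the ARFF format requires.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.StringJoiner;

// Hypothetical minimal ARFF builder; not the article's Configuration.Builder.
final class ArffSketch {

    private String name = "relation";
    private final List<String> attributes = new ArrayList<>();
    private String classAttribute;
    private List<Integer> classValues = new ArrayList<>();
    private final List<String> rows = new ArrayList<>();

    ArffSketch name(String name) {
        this.name = name;
        return this;
    }

    // Numeric feature attributes, in the same order as the feature values.
    ArffSketch attribute(String attribute) {
        attributes.add(attribute);
        return this;
    }

    // Nominal class attribute; it is always written last.
    ArffSketch classAttribute(String name, List<Integer> values) {
        this.classAttribute = name;
        this.classValues = new ArrayList<>(values);
        return this;
    }

    ArffSketch instance(List<Double> features, int classValue) {
        StringJoiner row = new StringJoiner(",");
        for (Double f : features) {
            row.add(f == null ? "?" : Double.toString(f)); // '?' marks a missing value in ARFF
        }
        row.add(Integer.toString(classValue));
        rows.add(row.toString());
        return this;
    }

    String build() {
        if (classAttribute == null) {
            throw new IllegalStateException("class attribute not set");
        }
        StringBuilder sb = new StringBuilder();
        sb.append("@relation ").append(name).append('\n');
        for (String a : attributes) {
            sb.append("@attribute ").append(a).append(" numeric\n");
        }
        StringJoiner values = new StringJoiner(",", "{", "}");
        for (Integer v : classValues) {
            values.add(v.toString());
        }
        sb.append("@attribute ").append(classAttribute).append(' ').append(values).append('\n');
        sb.append("@data\n");
        for (String r : rows) {
            sb.append(r).append('\n');
        }
        return sb.toString();
    }

    public static void main(String[] args) {
        String arff = new ArffSketch()
                .name("iris-classification")
                .attribute("sepalLength")
                .attribute("sepalWidth")
                .attribute("petalLength")
                .attribute("petalWidth")
                .classAttribute("type", Arrays.asList(0, 1, 2))
                .instance(Arrays.asList(5.1, 3.5, 1.4, 0.2), 0)
                .build();
        System.out.print(arff);
    }
}
```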

Classifier evaluation

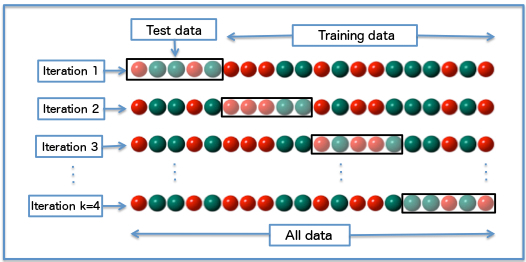

A classifier evaluation shows how accurate it is. Weka uses k-fold cross-validation for it. The following diagram gives a clear visual explanation of how it works.

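Training data is used to train the classifier, so it must contain class values. Test data is used to check the classifier: its class values are not given to the classifier but are compared with its predictions.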
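The fold bookkeeping behind k-fold cross-validation can be sketched in plain Java as below. This is a minimal illustration over instance indices, without stratification; in Weka itself, Evaluation.crossValidateModel(classifier, instances, numFolds, new Random(seed)) performs the equivalent work and, for a nominal class, also stratifies the folds. KFoldSketch is a hypothetical name, and the seed is arbitrary.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;
import java.util.Random;

// Minimal k-fold split over instance indices (no stratification).
final class KFoldSketch {

    // Shuffles indices 0..n-1 and deals them round-robin into k folds,
    // so fold sizes differ by at most one.
    static List<List<Integer>> folds(int n, int k, long seed) {
        if (k < 2 || k > n) {
            throw new IllegalArgumentException("need 2 <= k <= n");
        }
        List<Integer> indices = new ArrayList<>();
        for (int i = 0; i < n; i++) {
            indices.add(i);
        }
        Collections.shuffle(indices, new Random(seed));
        List<List<Integer>> folds = new ArrayList<>();
        for (int f = 0; f < k; f++) {
            folds.add(new ArrayList<>());
        }
        for (int i = 0; i < n; i++) {
            folds.get(i % k).add(indices.get(i));
        }
        return folds;
    }

    // Training indices for a given test fold: every index outside that fold.
    static List<Integer> trainingIndices(List<List<Integer>> folds, int testFold) {
        List<Integer> train = new ArrayList<>();
        for (int f = 0; f < folds.size(); f++) {
            if (f != testFold) {
                train.addAll(folds.get(f));
            }
        }
        return train;
    }

    public static void main(String[] args) {
        List<List<Integer>> folds = folds(150, 10, 42L);
        for (int f = 0; f < folds.size(); f++) {
            List<Integer> test = folds.get(f);
            List<Integer> train = trainingIndices(folds, f);
            // A real evaluation would train on `train`, predict `test` and accumulate the results.
            System.out.println("fold " + f + ": train=" + train.size() + ", test=" + test.size());
        }
    }
}
```

With the 150 iris instances and k = 10, every fold holds 15 test instances and the classifier is trained on the other 135 each time.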

Results

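The output is shown below. Note that evaluate() tests the classifier on the same 150 instances it was trained on rather than cross-validating it, so the 100 % accuracy is an optimistic, training-set figure: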

```text
Correctly Classified Instances         150              100      %
Incorrectly Classified Instances         0                0      %
Kappa statistic                          1
Mean absolute error                      0.0148
Root mean squared error                  0.0606
Relative absolute error                  3.33   %
Root relative squared error             12.8585 %
Total Number of Instances              150

Instance has been classified as VERSICOLOR with probability 100.0%.
```
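The first lines of this summary can be reproduced from a confusion matrix (rows are actual classes, columns are predicted classes). Accuracy is the share of the diagonal, and the Kappa statistic is Cohen’s kappa, (p_o - p_e) / (1 - p_e), where p_o is the observed agreement and p_e the agreement expected by chance from the row and column totals. The sketch below assumes unit instance weights (Weka also supports weighted instances); AgreementSketch is a hypothetical name.

```java
// Sketch: accuracy and Cohen's kappa from a confusion matrix
// (rows = actual class, columns = predicted class, unit instance weights).
final class AgreementSketch {

    static double accuracy(int[][] m) {
        long correct = 0;
        long total = 0;
        for (int i = 0; i < m.length; i++) {
            for (int j = 0; j < m[i].length; j++) {
                total += m[i][j];
                if (i == j) {
                    correct += m[i][j];
                }
            }
        }
        return (double) correct / total;
    }

    // Undefined (division by zero) when chance agreement is exactly 1.
    static double kappa(int[][] m) {
        int k = m.length;
        double total = 0;
        double diagonal = 0;
        double[] rowSums = new double[k];
        double[] colSums = new double[k];
        for (int i = 0; i < k; i++) {
            for (int j = 0; j < k; j++) {
                rowSums[i] += m[i][j];
                colSums[j] += m[i][j];
                total += m[i][j];
            }
            diagonal += m[i][i];
        }
        double observed = diagonal / total;
        double chance = 0;
        for (int i = 0; i < k; i++) {
            chance += (rowSums[i] / total) * (colSums[i] / total);
        }
        return (observed - chance) / (1 - chance);
    }

    public static void main(String[] args) {
        // 150 iris instances, all on the diagonal, as in the training-set evaluation above
        int[][] perfect = {{50, 0, 0}, {0, 50, 0}, {0, 0, 50}};
        System.out.println(accuracy(perfect) + " " + kappa(perfect));
    }
}
```

For a perfect diagonal both values are 1, which matches the 100 % and "Kappa statistic 1" lines above.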

Conclusions

Of course, this classification problem is simplified; real-world scenarios are much more complicated. They may require special preparation of the data set, selecting only the most important features, choosing the most appropriate classifier, implementing your own modification and so on. In this article I have tried to show you a starting point for this kind of problem and to provide useful wrappers that simplify development with Weka. I hope you’ve found something useful in it.